Adaptively Regulating Privacy as Contextual Integrity


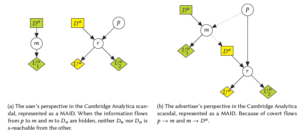

In this project, Dr. Benthall and Dr. Sivan-Sevilla propose a new model for regulating privacy. Privacy regulation, largely grounded in theories of privacy as control or secrecy, has recently come under scrutiny: the notice-and-consent paradigm has proven ineffective in the face of opaque technologies and managerialist responses by the market. They propose an alternative regulatory model centered on the definition of privacy as Contextual Integrity (CI). Regulating according to CI involves operationalizing the social goods at stake and modeling how appropriate information flows promote those goods. This social-scientific modeling process is informed, deployed, and evaluated through agile regulatory processes (adaptive regulation) in three learning cycles: (a) the assessment of new risks, (b) real-time monitoring of existing threat actors, and (c) validity assessment of existing regulatory instruments. At the core of their proposal is Regulatory CI, a formalization of Contextual Integrity in which information flows are modeled and audited using Bayesian networks and causal game theory. They use the Cambridge Analytica scandal to demonstrate gaps in current regulatory paradigms and the novelty of their proposal.
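Contextual Integrity evaluates an information flow by five parameters (sender, recipient, information subject, information type or attribute, and transmission principle) against the norms of the context in which it occurs. The Python sketch below illustrates only that flow-as-tuple auditing idea; it is not the Bayesian-network and causal-game-theory formalization of Regulatory CI. The `Flow`, `matches`, and `audit` names, the wildcard norm format, and the example context (loosely modeled on the pattern of research data moving on to a political consultancy) are all hypothetical.

```python
from dataclasses import dataclass, astuple

ANY = "*"  # hypothetical wildcard: a norm parameter set to ANY matches any value


@dataclass(frozen=True)
class Flow:
    """An information flow described by the five CI parameters."""
    sender: str
    recipient: str
    subject: str
    attribute: str
    transmission_principle: str


def matches(norm: Flow, flow: Flow) -> bool:
    """True if every parameter of the norm is ANY or equals the flow's value."""
    return all(n == ANY or n == f for n, f in zip(astuple(norm), astuple(flow)))


def audit(flows, norms):
    """Return the flows that match none of the context's norms (CI violations)."""
    return [f for f in flows if not any(matches(n, f) for n in norms)]


# Hypothetical norm for a hypothetical academic-research context: participants
# may share any kind of information with a researcher, under consent.
research_norms = [Flow("participant", "researcher", ANY, ANY, "consent")]

observed = [
    Flow("participant", "researcher", "participant", "quiz_answers", "consent"),
    Flow("researcher", "political_consultancy", "participant", "quiz_answers", "sale"),
]
violations = audit(observed, research_norms)
print(violations)  # only the onward flow to the political consultancy is flagged
```

Exact matching with wildcards keeps the sketch small, but it cannot express probabilistic or causal relationships between flows, which the Regulatory CI proposal addresses with Bayesian networks and causal game theory.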

Intermediation for/against Accountability: A comparative analysis of formal & informal US privacy watchdogs

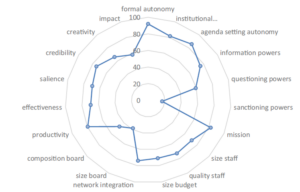

In this project, Dr. Sivan-Sevilla seeks to assess the impact of ‘privacy intermediaries’ on effective accountability across the US privacy regime. These US privacy intermediaries include privacy NGOs, tech whistleblowers, tech journalists, academic researchers, FISA courts, and congressional committees that have been actively trying to hold privacy threat actors to account. They work to advance consumer privacy vis-à-vis corporations and to fight government surveillance vis-à-vis the security establishment. They operate both formally and informally, but it remains unclear to what extent they promote meaningful accountability of threat actors. According to the literature, the political context, the capacity of the regulator, the strength of intermediary networks, and the level of formality all shape the extent to which intermediaries can promote ‘meaningful’ accountability. In the broken US privacy regime, the impact of regulatory intermediation is unclear. This project asks: (1) How do formal and informal US privacy watchdogs hold the US government and corporations to account? (2) How can we explain different levels of accountability powers across public vs. private threat actors and vis-à-vis formal vs. informal privacy watchdogs? (3) What are the consequences of these varying accountability powers for the individual rights of data subjects? The ‘accountability powers’ of privacy intermediaries are assessed based on their authority, resources, and application of power. Through a questionnaire that includes accountability index indicators, in-depth interviews, and desk research of publicly available documents from various privacy watchdogs, this project aims to shed light on whether and how effective privacy accountability emerges in the US. Preliminary results show that privacy intermediation for digital accountability works in creative, innovative, and informal ways when privacy threat actors are less prepared for scrutiny and oversight.
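The project assesses accountability powers along three dimensions (authority, resources, and application of power), but the description does not say how questionnaire indicators are aggregated. The Python sketch below assumes, purely for illustration, that each dimension is scored on a 0 to 1 scale and combined with an equal-weighted mean, then compares formal and informal watchdogs. The `Watchdog` type, the scale, the aggregation rule, and the example scores are all hypothetical, not results from the project.

```python
from dataclasses import dataclass
from statistics import mean

# The three dimensions named in the project description.
DIMENSIONS = ("authority", "resources", "application_of_power")


@dataclass
class Watchdog:
    name: str     # hypothetical identifier
    formal: bool  # formal (e.g. a court or committee) vs informal (e.g. an NGO)
    scores: dict  # dimension -> score on an assumed [0, 1] scale


def accountability_index(w: Watchdog) -> float:
    """Equal-weighted mean of the three dimension scores (an assumed aggregation)."""
    for d in DIMENSIONS:
        if not 0.0 <= w.scores[d] <= 1.0:
            raise ValueError(f"{w.name}: {d} score must lie in [0, 1]")
    return mean(w.scores[d] for d in DIMENSIONS)


def mean_index_by_formality(watchdogs):
    """Average index for formal and informal watchdogs, for comparison."""
    groups = {True: [], False: []}
    for w in watchdogs:
        groups[w.formal].append(accountability_index(w))
    return {("formal" if k else "informal"): mean(v) for k, v in groups.items() if v}


# Illustrative, made-up scores for two hypothetical watchdogs.
watchdogs = [
    Watchdog("watchdog_a", formal=True,
             scores={"authority": 0.5, "resources": 0.75, "application_of_power": 0.25}),
    Watchdog("watchdog_b", formal=False,
             scores={"authority": 0.0, "resources": 0.25, "application_of_power": 0.5}),
]
result = mean_index_by_formality(watchdogs)
print(result)  # {'formal': 0.5, 'informal': 0.25}
```

An equal-weighted mean is the simplest defensible default; an actual index might weight dimensions differently or keep them separate so that, for example, high authority cannot mask weak application of power.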

First-party data and the management of privacy in a new digital advertising ecosystem

In this project, Patrick Parham seeks to understand how privacy is managed in a digital advertising ecosystem after third-party cookies. Privacy pressures on the online advertising industry have led organizations to consider shifting from third-party to first-party data solutions, addressing privacy concerns while satisfying business interests. As a result, individual advertisers carry increasing weight in consumers’ privacy decisions: they decide how to target population segments using first-party data instead of defaulting to the capabilities of the primary adtech platforms. Advertisers, who are not used to such central privacy roles, thus become ultimately responsible for putting privacy-preserving first-party data solutions to work. This proposed project examines how advertisers currently address privacy in their work and how they manage these emerging privacy-preserving solutions that leverage first-party data for advertising. Through a thematic analysis of semi-structured interviews, it investigates the experience of advertiser employees who, at the business-process level, are responsible for coordinating both targeting based on first-party data and privacy. The research also seeks to understand how standards and guidance developed by the industry’s trade organization on first-party data practices and associated privacy-preserving solutions shape advertisers’ disparate attempts to coordinate privacy. To do so, it applies critical discourse analysis to a corpus of standards, guidance, technical documentation, and press releases from a single industry trade organization’s privacy initiatives.